I am currently a Ph.D. student at the National University of Defense Technology (NUDT), supervised by Prof. Xinjun Mao and co-supervised by Prof. Yue Yu. I received my bachelor’s degree from the Tsien Hsue-shen Class, NUDT, in June 2020. My research interests include AI/LLM4SE, code reuse, code snippet adaptation, and empirical software engineering.

🔥 News

- 2025.12: 🎉🎉 Our paper about Context Adaptation Bug Resolution was directly accepted by FSE 2026 (9.4%)! This is the first FSE paper in our group!

- 2025.09: 🎉🎉 Our paper about Code Adaptation Benchmark (AdaptEval) was accepted by ASE 2025 after major revision! This is the first ASE paper in our group!

- 2025.06: 🎉🎉 One paper was accepted by ICSME 2025.

- 2025.04: 🎉🎉 Thrilled to announce that our ICPC paper won the 🏆ACM SIGSOFT Distinguished Paper Award!

- 2025.01: 🎉🎉 One paper was accepted by ICPC 2025.

- 2024.12: 🎉🎉 One paper was accepted by SANER 2025.

- 2024.10: 🎉🎉 Our paper about LLM-based Code Adaptation was directly accepted by ICSE 2025 (10.3%)! This is the first CCF-A SE conference paper in our group!

📝 Publications

Representative Works

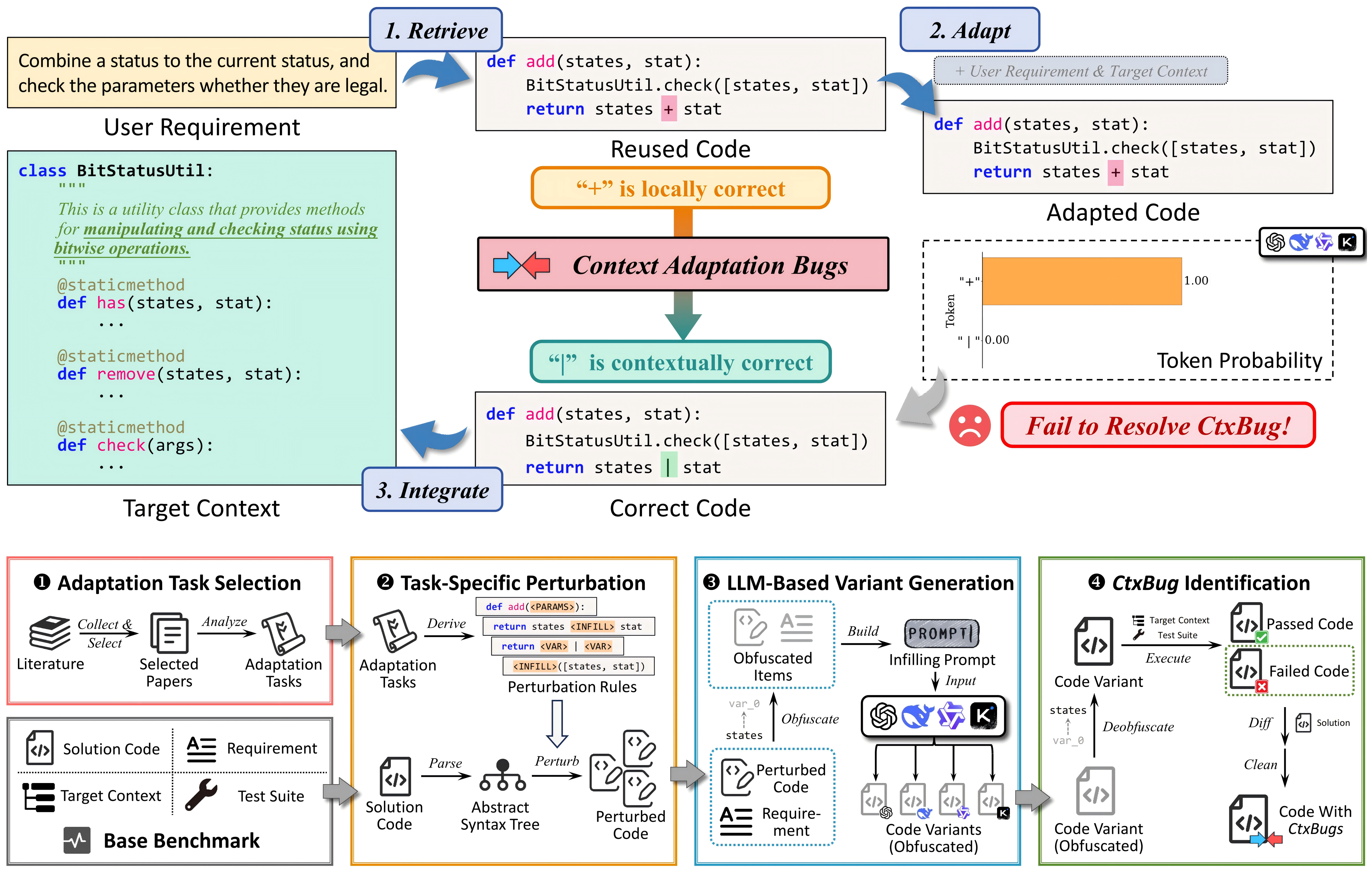

Coding in a Bubble? Evaluating LLMs in Resolving Context Adaptation Bugs During Code Adaptation

Tanghaoran Zhang, Xinjun Mao, Shangwen Wang, Yuxin Zhao, Yao Lu, Zezhou Tang, Wenyu Xu, Longfei Sun, Changrong Xie, Kang Yang and Yue Yu.

FSE 2026 (CCF-A)

- We propose CtxBugGen, a novel framework for generating CtxBugs through a four-step process: (1) Selection of four established context-aware adaptation tasks from the literature, (2) Perturbation via task-specific rules to induce CtxBugs from LLMs while ensuring their plausibility, (3) Generation of candidate variants by prompting LLMs without any context constraints and (4) Identification of valid CtxBugs through syntactic differencing and test execution in the target context. Based on the benchmark constructed by CtxBugGen, we conduct an empirical study with four state-of-the-art LLMs. Our results reveal their unsatisfactory performance in CtxBug resolution, highlighting their preference for local code correctness and critical weakness in cross-context reasoning.

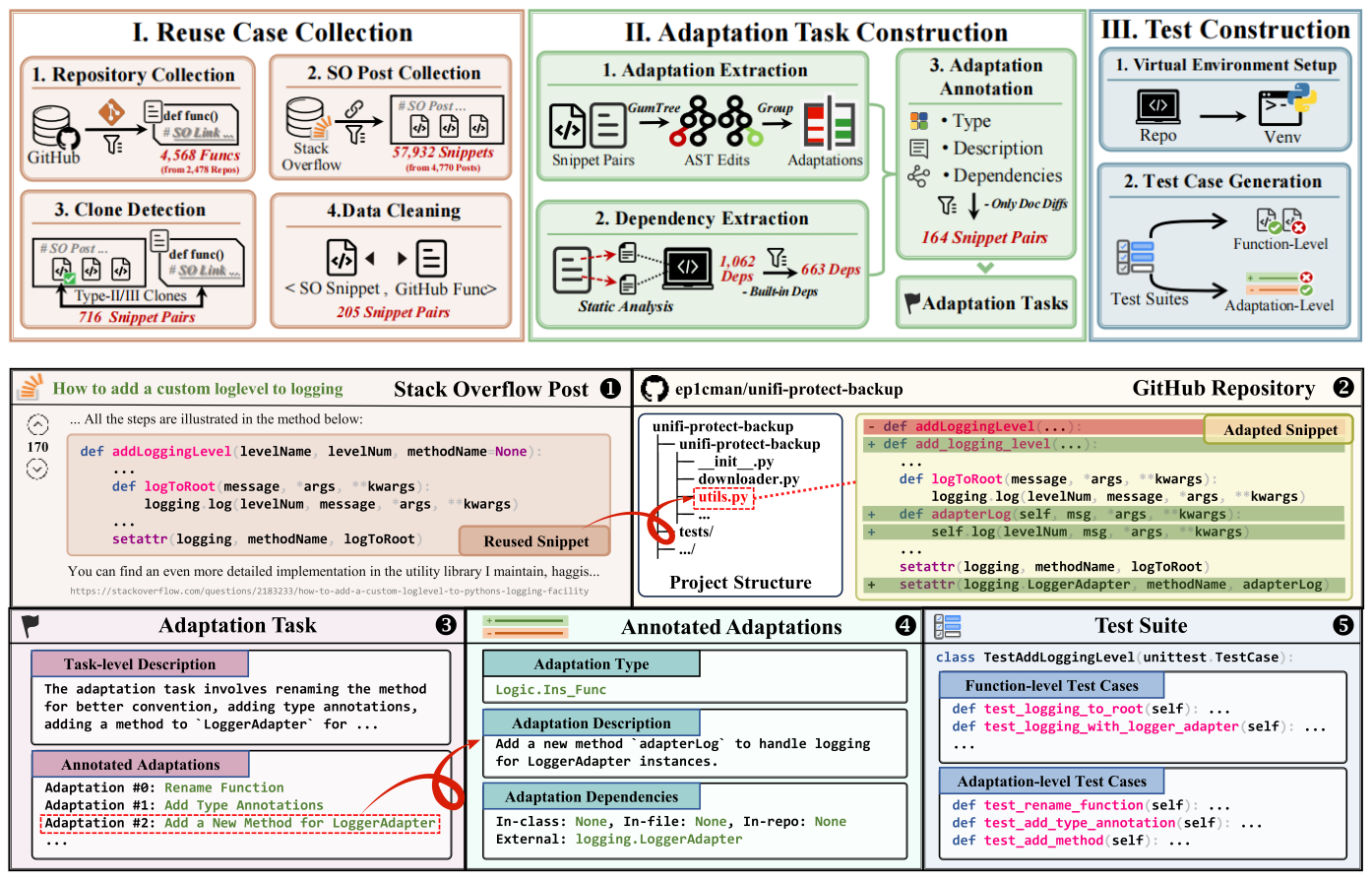

AdaptEval: A Benchmark for Evaluating Large Language Models on Code Snippet Adaptation

Tanghaoran Zhang, Xinjun Mao, Shangwen Wang, Yuxin Zhao, Yao Lu, Jin Zhang, Zhang Zhang, Kang Yang and Yue Yu.

ASE 2025 (CCF-A)

- We construct AdaptEval, the first benchmark for evaluating LLM-based code snippet adaptation. It incorporates three distinctive features: (1) practical context derived from developers’ practices, preserving rich contextual information from Stack Overflow and GitHub communities; (2) multi-granularity annotations supporting the evaluation of LLMs across diverse adaptation scenarios; (3) fine-grained evaluation implemented by a two-tier testing framework, which enables evaluating LLMs’ performance across various individual adaptations. Six instruction-tuned LLMs and three reasoning LLMs are evaluated.

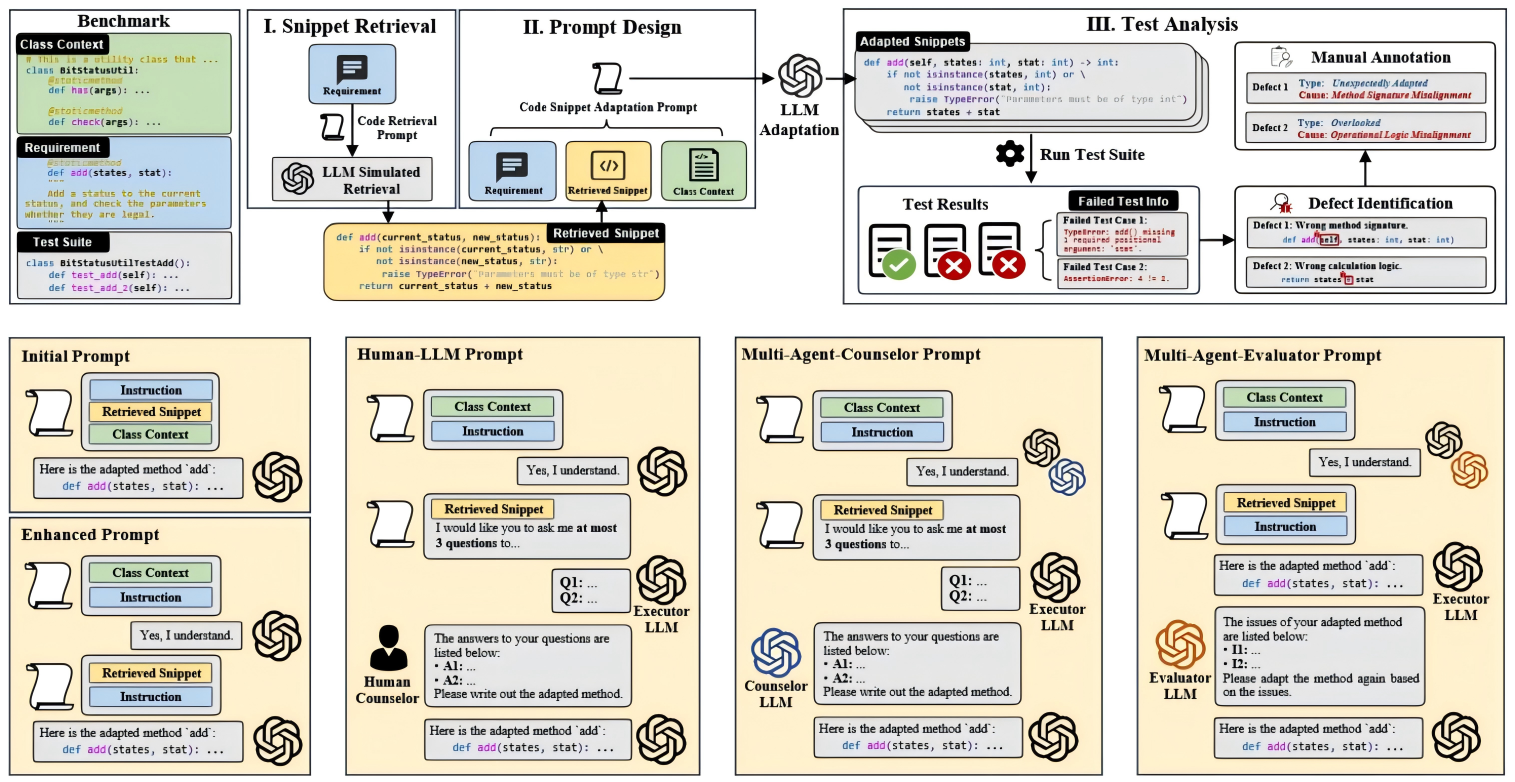

Tanghaoran Zhang, Yue Yu, Xinjun Mao, Shangwen Wang, Kang Yang, Yao Lu, Zhang Zhang and Yuxin Zhao.

ICSE 2025 (CCF-A)

- We present the first empirical investigation of the capability of Large Language Models (LLMs) on the code adaptation task and find that their sub-optimal performance has three main causes: (1) Unclear Requirement, (2) Requirement Misalignment and (3) Context Misapplication. To resolve these issues, we propose an interactive prompting approach to elicit LLMs’ ability in code snippet adaptation.

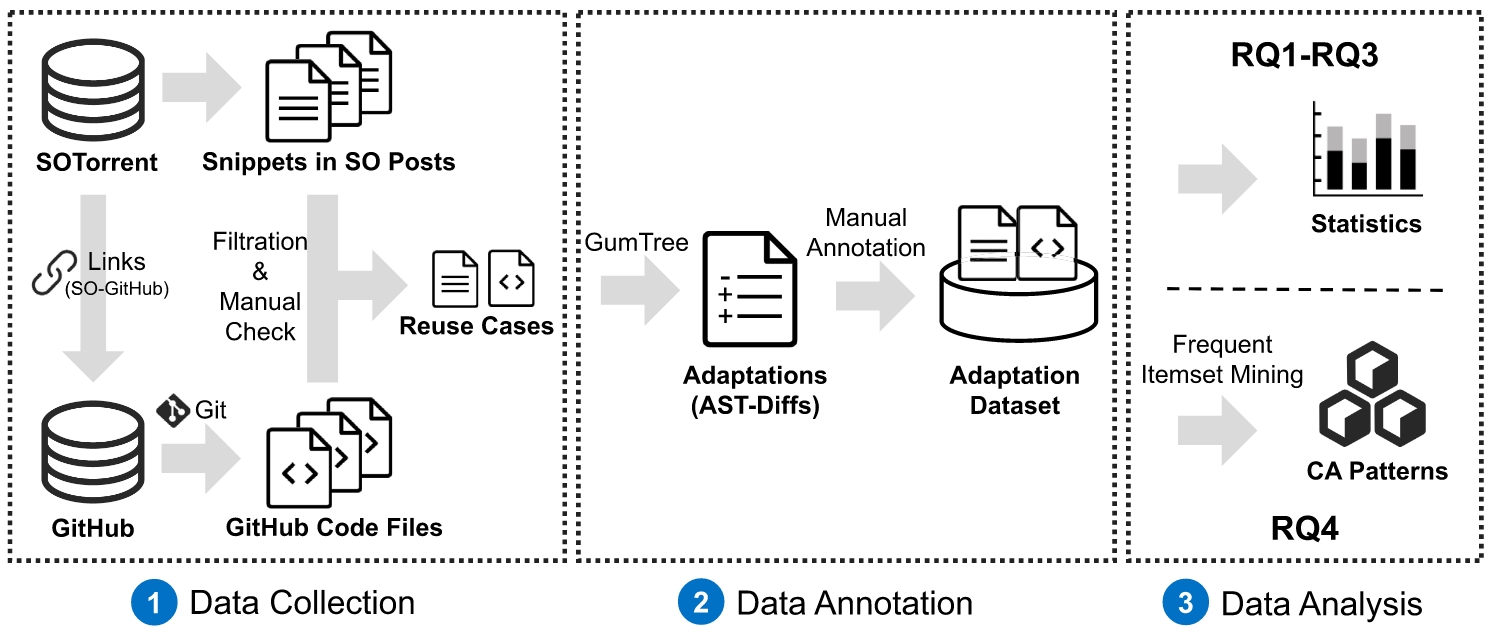

Tanghaoran Zhang, Yao Lu, Yue Yu, Xinjun Mao, Yang Zhang and Yuxin Zhao.

TSE (CCF-A, SCI-Q1), 2024

- We investigate how developers adapt code snippets to their project context based on a semi-structured interview and a quantitative study on 300 real-world adaptation cases. We point out current challenges in the adaptation practices and obtain four typical context-based adaptation patterns.

- FENSE: A Feature-based Ensemble Modeling Approach to Cross-project Just-in-time Defect Prediction, Tanghaoran Zhang, Yue Yu, Xinjun Mao, Yao Lu, Zhixing Li and Huaimin Wang, EMSE (CCF-B, JCR-Q1), 2022

All Publications

- ConflictLens: An LLM-Based Method for Detecting Semantic Merge Conflicts, Longfei Sun, Yao Lu, Xinjun Mao, Tanghaoran Zhang, Zhang Zhang and Huiping Zhou, SEKE 2025 (CCF-C).

- Understanding the Faults in Serverless Computing Based Applications: An Empirical Study, Changrong Xie, Yang Zhang, Xinjun Mao, Kang Yang and Tanghaoran Zhang, ICSME 2025 (CCF-B).

- CARLDA: An Approach for Stack Overflow API Mention Recognition Driven by Context and LLM-based Data Augmentation, Zhang Zhang, Xinjun Mao, Shangwen Wang, Kang Yang, Tanghaoran Zhang and Yao Lu, JSEP (CCF-B), 2025.

- Large Language Models are Qualified Benchmark Builders: Rebuilding Pre-Training Datasets for Advancing Code Intelligence Tasks, Kang Yang, Xinjun Mao, Shangwen Wang, Yanlin Wang, Tanghaoran Zhang, Bo Lin, Yihao Qin, Zhang Zhang, Yao Lu and Kamal Al-Sabahi, ICPC 2025 (CCF-B), 🏆ACM SIGSOFT Distinguished Paper Award.

- Improving API Knowledge Comprehensibility: A Context-Dependent Entity Detection and Context Completion Approach Using LLM, Zhang Zhang, Xinjun Mao, Shangwen Wang, Kang Yang, Tanghaoran Zhang, Fei Gao and Xunhui Zhang, SANER 2025 (CCF-B).

- An Empirical Study of Cross-Project Pull Request Recommendation in GitHub, Wenyu Xu, Yao Lu, Xunhui Zhang, Tanghaoran Zhang, Bo Lin and Xinjun Mao, APSEC 2024 (CCF-C).

- MUSE: A Multi-Feature Semantic Fusion Method for ROS Node Search Based on Knowledge Graph, Yuxin Zhao, Xinjun Mao, Tanghaoran Zhang and Zhang Zhang, APSEC 2023 (CCF-C).

- An Effective Method for Constructing Knowledge Graph to Search Reusable ROS Nodes, Yuxin Zhao, Xinjun Mao, Sun Bo, Tanghaoran Zhang and Shuo Yang, SEKE 2023 (CCF-C).

- An Extensive Study of the Structure Features in Transformer-based Code Semantic Summarization, Kang Yang, Xinjun Mao, Shangwen Wang, Yihao Qin, Tanghaoran Zhang, Yao Lu and Kamal Al-Sabahi, ICPC 2023 (CCF-B).

- Verifying ReLU Neural Networks from a Model Checking Perspective, Wanwei Liu, Fu Song, Tanghaoran Zhang and Ji Wang, JCST (CCF-B), 2020.

🎖 Honors and Awards

- 2025.11, 💰First-Prize Merit Scholarship, NUDT.

- 2025.04, 🏆ACM SIGSOFT Distinguished Paper Award at ICPC 2025.

- 2023.03, 💰Second-Prize Merit Scholarship, NUDT.

- 2020.05, 💰Qiangjun Scholarship, NUDT.

- 2019.10, 🏅Outstanding Winner of M* Modeling Contest.

- 2019.10, 🏆CCF Outstanding Undergraduate, CCF.

- 2019.05, 💰Yinhe Scholarship, College of Computer Science and Technology, NUDT.

- 2019.05, 🏅Meritorious Winner of MCM/ICM.

- 2018.05, 🏅Meritorious Winner of MCM/ICM.

📖 Education

- 2020.09 - present, National University of Defense Technology (NUDT), Ph.D. Student in Software Engineering.

- 2016.09 - 2020.06, Tsien Hsue-shen Class (1/30), National University of Defense Technology (NUDT), B.E. in Software Engineering.

- 2010.09 - 2016.06, The High School Affiliated to Renmin University of China (RDFZ), Middle and High School.

⚙️ Services

- Program Committee

  - ICSE’26 Shadow PC

- Reviewer

  - EMSE, KAIS

- External Reviewer

  - Journal: TSE, TOSEM, EMSE, JCST, JoS

  - Conference: FSE’26, SANER’26, ICLR’25, ASE’24, ESEM’24

💬 Invited Talks

- 2025.11, ASE 2025 Paper Pre-conference Presentation | Video.

- 2024.12, ICSE 2025 Paper Pre-conference Presentation | Video.

💻 Internships

- 2025.03 - 2025.10, Visiting student at Peng Cheng Laboratory, Shenzhen, Guangdong.

- 2019.07 - 2019.10, Mitacs Research Internship at SEAL, Queen’s University, Canada, supervised by Prof. Ying Zou.